Generating photo-realistic images and videos is a challenge at the heart of computer graphics. Over the last couple of decades, we have come a long way from the pixelated graphics of Doom 1993 to high-quality renders like that of Red Dead Redemption 2 below. If you have played some of these modern games, you must have wondered how a beautiful and photo-realistic scene like the one below is rendered on your screen in real-time.

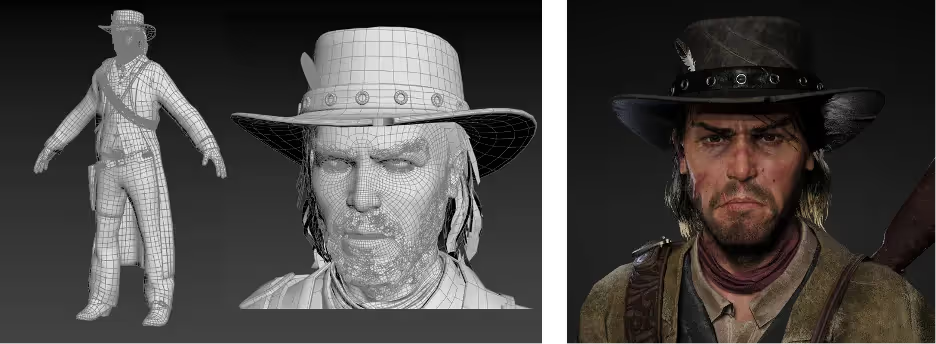

The game environment is a 3D model which is made up of millions of triangles/quadrilaterals that determine the shape, color, and appearance of the objects in that environment. Below is an example of how the main character in the above game might have been modeled:

When you play the game, the scene is “rendered” on your computer screen similar to how a photograph is formed in a digital camera. Game assets (i.e., 3D models) are projected on a virtual camera through a complicated process that approximates the physics of the image formation. All this computation happens in real-time on the GPU of your system as you keep moving through the game environment.

When designing the game environment, these game assets, ranging from small objects like bottles to large-scale scenes like an entire city, are created in 3D modeling software like Blender. In order to simulate realism, these assets have to be of high quality and need to possess intricate details like dents on bottles or rust on pipes. Not surprisingly, creating such environments requires sizable collaboration from human writers, artists, and developers working together using a variety of software tools.

But what if you can generate a synthetic 3D gaming environment using a neural network that has been trained on just a few images? This idea is extremely powerful in the creation of immersive 3D content that not only has high-quality details but also dimensional accuracy. We at Preimage think that its implications on inspections, gaming, films or development of AR/VR applications are immense. We will explore how Neural Rendering works and its use-cases below.

So What is Neural Rendering?

The main idea behind neural rendering is to combine the classical computer graphics with the recent advances in AI. The process involves training a neural network with images or video of a scene and getting the AI model to “learn” and predict how the image would have looked like if it was captured from a different angle. That means by capturing just a few photos of a scene you can render an image from any position and orientation.

Once the AI model has “learnt” the scene it also facilitates other applications like changing the lighting, object shapes, and even modeling how the scene changes through time (allowing for a sort of time travel through the scene).

Real-World Applications

Industrial Inspections

Asset-heavy companies especially in industries like infrastructure, oil & gas, telecom, or mining carry out periodic inspection of their operational sites for continuous assessment and maintenance. It helps them catch problems early and reduce the chances of an accident, malfunction, or breakdown.

Conventionally, such industrial inspections are carried out in two ways:

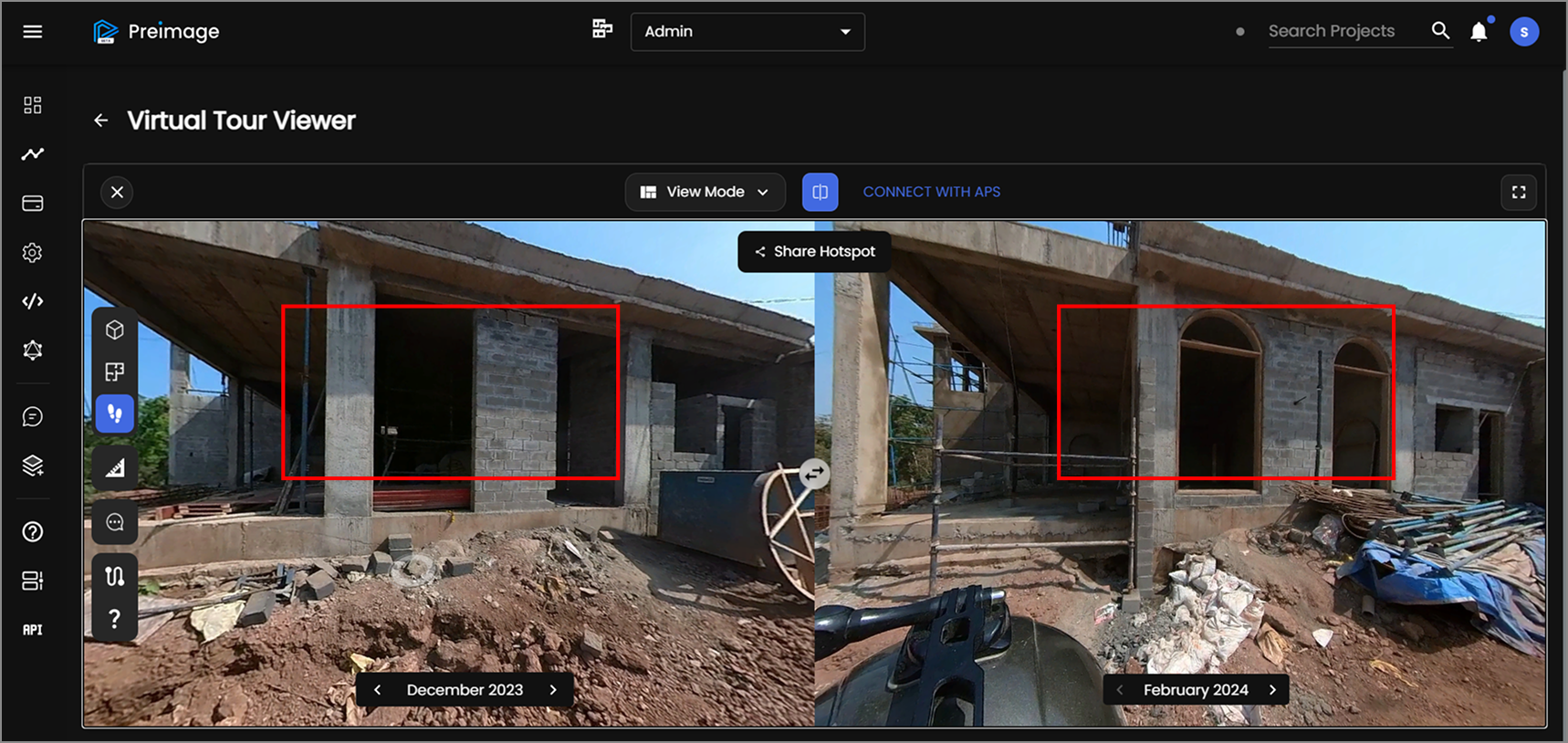

- By creating 3D models using photos. Photos of the assets (telecom tower, refineries etc.) are captured using drones or DSLR and are then converted into 3D models using a 3D reconstruction software. These 3D models are dimensionally accurate with respect to the real-world and hence allow precise measurements and annotations. However, they fall short in terms of capturing fine details and texture-less regions like plain white walls. Moreover, generating an extremely high-resolution 3D model from photos is expensive and time-consuming with current software tools.

- Using just images and videos. Images and videos capture high-resolution details of scenes including texture-less regions. However, they have two downsides: (a) making measurements is extremely tricky with images (e.g., measuring the angle at which the telecom tower is pointing with respect to ground), and (b) an object annotated in image 1 is not automatically annotated in image 2. These drawbacks make the process of inspection extremely manual and intensive.

Neural rendering offers the perfect hybrid of the above two approaches. It allows one to view the scene from various angles with high-resolution details like images, and since it’s a 3D representation, it facilitates accurate measurements along with consistent annotations of objects across views.

Photo Tourism, Virtual Flythroughs & Education

Since Neural Rendering allows you to view a scene from any position or orientation, it has the potential to allow users to virtually fly/drive/walk through it as in an interactive video game. This provides a much more immersive experience to the user than what can be possible with recorded videos or images.

Consequently, this opens opportunities in many areas, including photo tourism. Imagine being able to walk through the galleries of Angkor Wat with photo-realistic views of the inscriptions and carvings. The same concept can also be used in real estate, hospitality, and event management industries for virtual demos of properties, which can be such a convenience for end-users.

The use-cases for immersive educational walkthroughs in museums and in education curriculums are also endless.

Relocalization

Visual relocalization is the process of estimating where a photo was captured in a 3D scene. With Neural Rendering, as discussed above, one can estimate how an image would look like if viewed from a particular angle. The process can also be flipped, i.e., given an image, the AI model can predict the position at which that image was taken.

This has several applications including visual answering of questions in Augmented Reality (for e.g., “Show me some good cafes around”), investigative journalism (determining where a photograph or video was taken), and even for geotagging unstructured image collections on the internet.

Challenges: Scale and Editing

Neural Rendering is a rapidly developing field, and even with promising signs of what it can accomplish, there still are major hurdles yet to be overcome.

Scalability is one of the problems that a lot of such AI models struggle with. An AI model that reconstructs small scenes (e.g., telecom tower) really well, might struggle to represent medium-to-large size areas (city scale). Many solutions have been proposed to counter this problem, ranging from hierarchical space partitioning to multiresolution hash input encoding.

Another challenge faced is interpreting the weights learned by the AI model into formats that are comprehensible for humans. Editing scenes produced by Neural Rendering is not fully understood yet, and thus makes editing one part of the scene without affecting the quality of the rest, a tough challenge.

Needs Active Discussion on Fake Content and Privacy

As the lines blur between what is real and what is synthetic, questions about fake content and privacy obviously emerge. It is already becoming hard for humans to differentiate between real and synthetically generated faces, which has led to the whole debate around deepfake technology. Obama totally gave an introduction at MIT Intro to Deep Learning, we believe him, it’s a video!

With neural scene editing tech getting better, similar techniques could be applied to generate realistic-looking edited environments. Methods to overcome these problems include standards to explicitly require synthetic content to be labelled as such.

Another major concern is privacy preservation, for example automatic blurring of human faces, number plates, or other personal identifiable information from neural renders. Such issues need to be talked about more and should be satisfactorily solved before this technology goes mainstream. This is important for building trustworthy AI systems.

What is Preimage Solving?

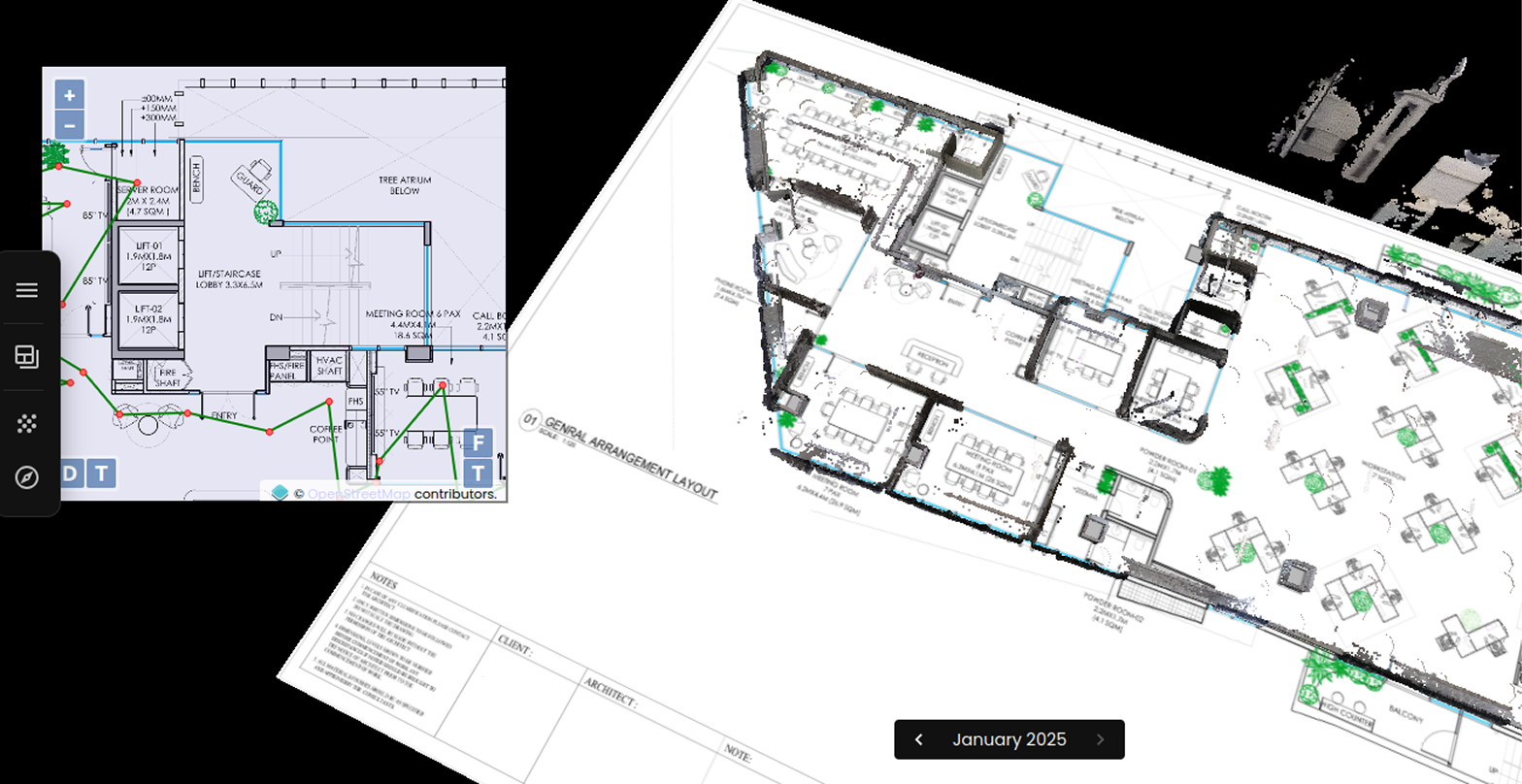

Preimage is building a next-gen 3D reconstruction pipeline that is optimized for the cloud. We’ve built upon the major advances that the field of 3D computer vision has made over the past decade and engineered a super-fast, scalable, and integrable 3D reconstruction platform.

Our pipeline can reconstruct small tabletop assets taken from a DSLR to large cities captured from drone into high-quality and accurate 3D models at unprecedented speeds. We’ll be launching our software in Q2 of 2022.

In the meantime, you can try out our 3D point cloud classification, DSM and DTM generation workflow on our web application (no installation needed) by uploading your RGB point clouds.

Have more applications of neural rendering in mind? Got any thoughts on this blog post? We would love to hear from you. Shoot us an e-mail at hello@preimage.ai or drop us a message through our contact form.

.webp)